Centralized Log Analysis

Objective

Operators should be able to view logs from all the VOLTHA components as well as from whitebox OLT devices in a single stream.

Solution Approach For VOLTHA Ecosystem

The chosen solution is an EFK (elasticsearch, kibana and fluentd-elasticsearch) setup for VOLTHA, which enables the operator to push logs from all VOLTHA components.

To deploy VOLTHA with the EFK stack, follow the section Support-for-logging-and-tracing-(optional) in the voltha-helm-charts README.

This deploys the EFK stack with a single-node Elasticsearch instance and a Kibana instance, plus a fluentd-elasticsearch pod on each node that allows workloads to be scheduled.

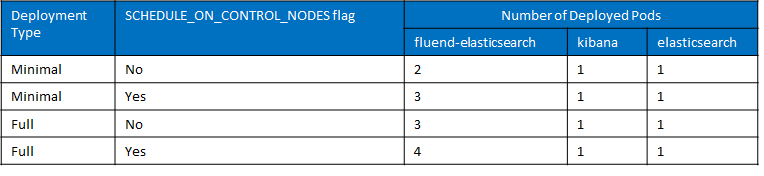

The number of deployed pods depends on the Deployment Type and the SCHEDULE_ON_CONTROL_NODES flag, as shown in the table.

To start using Kibana, navigate in your browser to http://<k8s_node_ip>:<exposed_port>. You can then search for events in the Discover section.
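A search of the kind run in Discover can also be expressed as a request against the Elasticsearch search API. The following is a minimal Python sketch that only builds such a request and does not send it. The index pattern `logstash-*`, the `@timestamp` field, port 9200 and the helper name `build_search_request` are assumptions made for illustration and may not match your deployment.

```python
import json


def build_search_request(es_host, es_port, text, minutes=15,
                         index_pattern="logstash-*", size=20):
    """Build (url, body) for an Elasticsearch _search request.

    Assumptions (not taken from the VOLTHA charts): logs are stored in
    indices matching `index_pattern` and carry an `@timestamp` field.
    """
    url = f"http://{es_host}:{es_port}/{index_pattern}/_search"
    body = {
        "size": size,
        # Newest events first, similar to the default Discover view.
        "sort": [{"@timestamp": {"order": "desc"}}],
        "query": {
            "bool": {
                # Free-text match, as typed into a search bar.
                "must": [{"query_string": {"query": text}}],
                # Restrict results to the last `minutes` minutes.
                "filter": [
                    {"range": {"@timestamp": {"gte": f"now-{minutes}m"}}}
                ],
            }
        },
    }
    return url, json.dumps(body)


if __name__ == "__main__":
    # Hypothetical address; replace with a reachable Elasticsearch endpoint.
    url, body = build_search_request("<k8s_node_ip>", 9200, "error")
    print(url)
    print(body)
```

The returned body can be sent as a POST request with a `Content-Type: application/json` header by any HTTP client, provided the Elasticsearch service is reachable from where the script runs.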

Solution Approach For Whitebox OLT Device

The chosen approach is to install td-agent (a fluentd variant) directly on the OLT device to capture logs and transmit them to the Elasticsearch pod running in the VOLTHA cluster.

A custom td-agent configuration file handles the formats of the log files involved, using the appropriate input plugins for the openolt process, the device management daemon and the system logs, together with the Elasticsearch output plugin. You can find the custom td-agent configuration file in *https://github.com/opencord/openolt/tree/master/logConf* and the installation steps in the *https://github.com/opencord/openolt/tree/master* README.

Log Collection from VOLTHA Ecosystem and Whitebox OLT Device

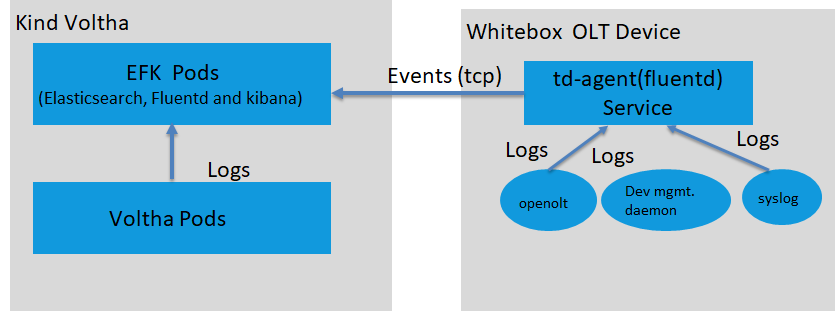

The diagram depicts log collection from the VOLTHA components and the whitebox OLT device through EFK. The fluentd pod collects logs from all the VOLTHA components and pushes them to the Elasticsearch pod. The td-agent (fluentd variant) service running on the whitebox OLT device captures logs from the openolt agent process, the device management daemon process and the system logs, and transmits them over TCP to the Elasticsearch pod running in the VOLTHA cluster.
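To make the data flow concrete, the sketch below shows roughly what shipping log lines to Elasticsearch involves, using the newline-delimited JSON format accepted by the Elasticsearch bulk API. It is illustrative only and is not how td-agent itself is implemented. The function name `to_bulk_payload`, the index name `olt-logs` and the `source` and `message` field names are hypothetical.

```python
import json


def to_bulk_payload(lines, index, source, timestamp):
    """Convert raw log lines into an Elasticsearch _bulk request body.

    Each log line becomes two JSON rows: an action row naming the target
    index, followed by the document itself. Blank lines are skipped.
    """
    rows = []
    for line in lines:
        message = line.rstrip("\r\n")
        if not message:
            continue  # nothing to ship for an empty line
        rows.append(json.dumps({"index": {"_index": index}}))
        rows.append(json.dumps({
            "@timestamp": timestamp,
            "source": source,      # e.g. which process produced the line
            "message": message,
        }))
    if not rows:
        return ""
    # The bulk API expects the body to end with a newline.
    return "\n".join(rows) + "\n"


if __name__ == "__main__":
    sample = ["openolt started\n", "port 1 state changed\n"]
    print(to_bulk_payload(sample, "olt-logs", "openolt",
                          "2024-01-01T00:00:00Z"), end="")
```

Such a payload would be sent with an HTTP POST to the `_bulk` endpoint using the `application/x-ndjson` content type. In the actual setup this work is done by the Elasticsearch output plugin configured in td-agent.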

Secure EFK setup and transport of Logs from OLT device

The operator can enhance the setup by making configuration changes according to their requirements.

Authentication, authorization and security features for EFK can be enabled via the X-Pack plugin and Role Based Access Control (RBAC) in Elasticsearch. The transmission of logs from the whitebox OLT device can be secured by enabling TLS/SSL encryption in the EFK setup and in td-agent. Refer to the following link for the security features: *https://www.elastic.co/guide/en/elasticsearch/reference/current/elasticsearch-security.html*

To enable TLS/SSL encryption for the Elasticsearch pod, refer to the following link:

*helm-charts::elasticsearch/examples/security*

To enable TLS/SSL encryption for the Kibana pod, refer to the following link:

*https://github.com/elastic/helm-charts/tree/master/kibana/examples/security*

To enable TLS/SSL encryption for the fluentd pod and the td-agent service, refer to the following link:

*https://github.com/kiwigrid/helm-charts/tree/master/charts/fluentd-elasticsearch*

Note: create a certs directory in /etc/td-agent on the OLT device and copy the elastic-ca.pem certificate into it.
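One way to check that the copied CA certificate is usable is to load it into a TLS context, which could then be used to verify the Elasticsearch endpoint. The Python sketch below is a suggestion under assumptions, not part of the td-agent setup: the helper names `ca_cert_path` and `make_tls_context` are hypothetical, and the path is built from the directory and file name mentioned in the note.

```python
import ssl
from pathlib import PurePosixPath


def ca_cert_path(base_dir="/etc/td-agent", name="elastic-ca.pem"):
    """Return the expected location of the CA certificate on the OLT."""
    return PurePosixPath(base_dir) / "certs" / name


def make_tls_context(ca_file):
    """Create a client TLS context that trusts only the given CA file.

    Raises an OSError subclass (FileNotFoundError) if the file is missing,
    and ssl.SSLError if the file is not a valid PEM certificate.
    """
    # create_default_context enables certificate and hostname verification.
    return ssl.create_default_context(cafile=str(ca_file))


if __name__ == "__main__":
    path = ca_cert_path()
    try:
        make_tls_context(path)
        print(f"loaded CA certificate from {path}")
    except (OSError, ssl.SSLError) as exc:
        print(f"could not load {path}: {exc}")
```

The resulting context can be passed to an HTTPS client, for example through the `context` argument of `urllib.request.urlopen`, to confirm that the Elasticsearch endpoint presents a certificate signed by this CA.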

Archive of Logs

There are various mechanisms available with EFK to save data. For example, operators can use the reporting feature to generate reports of saved searches as CSV documents, which can be transferred to a support organization via email. You can save searches restricted to a time range or filtered to the required fields, and then generate the report. To use the reporting features, refer to the following link: *https://www.elastic.co/guide/en/kibana/current/reporting-getting-started.html*

Note: By default, CSV files of up to 10 MB can be generated. To generate files larger than 10 MB, enable the X-Pack plugin and RBAC. Generating larger files also requires a bigger cluster configuration for the Elasticsearch pod: the Java heap space, CPU and memory need to be increased in line with the CSV file size.